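I was asked to run a vulnerability assessment on a website, so I looked into various ways of doing it. A tool called "skipfish" looked promising, so I tried it out. This post is a memo covering everything from installation to viewing the results.

What is skipfish?

skipfish is a website vulnerability scanner that Google has released as open source.

The official site is at the following URL.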

https://code.google.com/p/skipfish/

It is distributed as source code in a .tgz archive, so you have to compile it yourself. I wanted to use it on Windows, so I ran everything from cygwin.

Installation goes as follows.

$ wget https://skipfish.googlecode.com/files/skipfish-2.10b.tgz --no-check-certificate

--2013-12-10 01:30:39-- https://skipfish.googlecode.com/files/skipfish-2.10b.tgz

Resolving skipfish.googlecode.com (skipfish.googlecode.com)... 173.194.72.82

Connecting to skipfish.googlecode.com (skipfish.googlecode.com)|173.194.72.82|:443... connected.

WARNING: The certificate of `skipfish.googlecode.com' is not trusted.

WARNING: The certificate of `skipfish.googlecode.com' hasn't got a known issuer.

HTTP request sent, awaiting response... 200 OK

Length: 244528 (239K) [application/x-gzip]

Saving to: `skipfish-2.10b.tgz'

100%[======================================================================================================================================================>] 244,528 741K/s in 0.3s

2013-12-10 01:30:40 (741 KB/s) - `skipfish-2.10b.tgz' saved [244528/244528]

$ tar xvzf skipfish-2.10b.tgz

skipfish-2.10b/

skipfish-2.10b/assets/

skipfish-2.10b/assets/p_serv.png

skipfish-2.10b/assets/i_high.png

skipfish-2.10b/assets/n_expanded.png

skipfish-2.10b/assets/i_note.png

skipfish-2.10b/assets/n_missing.png

skipfish-2.10b/assets/n_clone.png

skipfish-2.10b/assets/n_failed.png

skipfish-2.10b/assets/n_unlinked.png

skipfish-2.10b/assets/n_collapsed.png

skipfish-2.10b/assets/n_children.png

skipfish-2.10b/assets/p_file.png

skipfish-2.10b/assets/COPYING

skipfish-2.10b/assets/i_low.png

skipfish-2.10b/assets/p_pinfo.png

skipfish-2.10b/assets/p_dir.png

skipfish-2.10b/assets/n_maybe_missing.png

skipfish-2.10b/assets/p_value.png

skipfish-2.10b/assets/p_unknown.png

skipfish-2.10b/assets/i_medium.png

skipfish-2.10b/assets/i_warn.png

skipfish-2.10b/assets/p_param.png

skipfish-2.10b/assets/sf_name.png

skipfish-2.10b/assets/mime_entry.png

skipfish-2.10b/assets/index.html

skipfish-2.10b/Makefile

skipfish-2.10b/tools/

skipfish-2.10b/tools/sfscandiff

skipfish-2.10b/README

skipfish-2.10b/doc/

skipfish-2.10b/doc/authentication.txt

skipfish-2.10b/doc/signatures.txt

skipfish-2.10b/doc/dictionaries.txt

skipfish-2.10b/doc/skipfish.1

skipfish-2.10b/ChangeLog

skipfish-2.10b/signatures/

skipfish-2.10b/signatures/signatures.conf

skipfish-2.10b/signatures/files.sigs

skipfish-2.10b/signatures/messages.sigs

skipfish-2.10b/signatures/context.sigs

skipfish-2.10b/signatures/mime.sigs

skipfish-2.10b/signatures/apps.sigs

skipfish-2.10b/dictionaries/

skipfish-2.10b/dictionaries/extensions-only.wl

skipfish-2.10b/dictionaries/medium.wl

skipfish-2.10b/dictionaries/minimal.wl

skipfish-2.10b/dictionaries/complete.wl

skipfish-2.10b/COPYING

skipfish-2.10b/config/

skipfish-2.10b/config/example.conf

skipfish-2.10b/src/

skipfish-2.10b/src/debug.h

skipfish-2.10b/src/report.h

skipfish-2.10b/src/report.c

skipfish-2.10b/src/analysis.c

skipfish-2.10b/src/analysis.h

skipfish-2.10b/src/signatures.c

skipfish-2.10b/src/auth.c

skipfish-2.10b/src/types.h

skipfish-2.10b/src/same_test.c

skipfish-2.10b/src/checks.h

skipfish-2.10b/src/crawler.c

skipfish-2.10b/src/http_client.c

skipfish-2.10b/src/skipfish.c

skipfish-2.10b/src/options.h

skipfish-2.10b/src/auth.h

skipfish-2.10b/src/alloc-inl.h

skipfish-2.10b/src/signatures.h

skipfish-2.10b/src/options.c

skipfish-2.10b/src/string-inl.h

skipfish-2.10b/src/http_client.h

skipfish-2.10b/src/crawler.h

skipfish-2.10b/src/checks.c

skipfish-2.10b/src/database.c

skipfish-2.10b/src/config.h

skipfish-2.10b/src/database.h

$ cd skipfish-2.10b/

$ make

cc -L/usr/local/lib/ -L/opt/local/lib src/skipfish.c -o skipfish \

-O3 -Wno-format -Wall -funsigned-char -g -ggdb -I/usr/local/include/ -I/opt/local/include/ -DVERSION=\"2.10b\" src/http_client.c src/database.c src/crawler.c src/analysis.c src/report.c src/checks.c src/signatures.c src/auth.c src/options.c -lcrypto -lssl -lidn -lz -lpcre

See doc/dictionaries.txt to pick a dictionary for the tool.

Having problems with your scans? Be sure to visit:

http://code.google.com/p/skipfish/wiki/KnownIssues

The following command displays the help.

$ ./skipfish --help
skipfish web application scanner - version 2.10b
Usage: ./skipfish [ options ... ] -W wordlist -o output_dir start_url [ start_url2 ... ]

Authentication and access options:

  -A user:pass      - use specified HTTP authentication credentials
  -F host=IP        - pretend that 'host' resolves to 'IP'
  -C name=val       - append a custom cookie to all requests
  -H name=val       - append a custom HTTP header to all requests
  -b (i|f|p)        - use headers consistent with MSIE / Firefox / iPhone
  -N                - do not accept any new cookies
  --auth-form url   - form authentication URL
  --auth-user user  - form authentication user
  --auth-pass pass  - form authentication password
  --auth-verify-url - URL for in-session detection

Crawl scope options:

  -d max_depth      - maximum crawl tree depth (16)
  -c max_child      - maximum children to index per node (512)
  -x max_desc       - maximum descendants to index per branch (8192)
  -r r_limit        - max total number of requests to send (100000000)
  -p crawl%         - node and link crawl probability (100%)
  -q hex            - repeat probabilistic scan with given seed
  -I string         - only follow URLs matching 'string'
  -X string         - exclude URLs matching 'string'
  -K string         - do not fuzz parameters named 'string'
  -D domain         - crawl cross-site links to another domain
  -B domain         - trust, but do not crawl, another domain
  -Z                - do not descend into 5xx locations
  -O                - do not submit any forms
  -P                - do not parse HTML, etc, to find new links

Reporting options:

  -o dir            - write output to specified directory (required)
  -M                - log warnings about mixed content / non-SSL passwords
  -E                - log all HTTP/1.0 / HTTP/1.1 caching intent mismatches
  -U                - log all external URLs and e-mails seen
  -Q                - completely suppress duplicate nodes in reports
  -u                - be quiet, disable realtime progress stats
  -v                - enable runtime logging (to stderr)

Dictionary management options:

  -W wordlist       - use a specified read-write wordlist (required)
  -S wordlist       - load a supplemental read-only wordlist
  -L                - do not auto-learn new keywords for the site
  -Y                - do not fuzz extensions in directory brute-force
  -R age            - purge words hit more than 'age' scans ago
  -T name=val       - add new form auto-fill rule
  -G max_guess      - maximum number of keyword guesses to keep (256)
  -z sigfile        - load signatures from this file

Performance settings:

  -g max_conn       - max simultaneous TCP connections, global (40)
  -m host_conn      - max simultaneous connections, per target IP (10)
  -f max_fail       - max number of consecutive HTTP errors (100)
  -t req_tmout      - total request response timeout (20 s)
  -w rw_tmout       - individual network I/O timeout (10 s)
  -i idle_tmout     - timeout on idle HTTP connections (10 s)
  -s s_limit        - response size limit (400000 B)
  -e                - do not keep binary responses for reporting

Other settings:

  -l max_req        - max requests per second (0.000000)
  -k duration       - stop scanning after the given duration h:m:s
  --config file     - load the specified configuration file

Send comments and complaints to .

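Vulnerability checks are carried out by recursively crawling the target site and by sending dictionary-based requests. Four dictionaries ship with the tool. I used "complete.wl", which appears to be the one that covers the most checks.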

$ cd ~/skipfish-2.10b/dictionaries
$ ls -l
total 112
-r--r----- 1 ******** None 35048 Dec  4  2012 complete.wl
-r--r----- 1 ******** None  1333 Dec  4  2012 extensions-only.wl
-r--r----- 1 ******** None 34194 Dec  4  2012 medium.wl
-r--r----- 1 ******** None 34194 Dec  4  2012 minimal.wl

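Now let's run the scanner as described in the help.

(I failed to capture the log from the actual vulnerability check, so the log below comes from a run against localhost using the smallest dictionary.)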

$ ./skipfish -o ~/report -S dictionaries/minimal.wl http://localhost:8080/local/
skipfish web application scanner - version 2.10b

Welcome to skipfish. Here are some useful tips:

1) To abort the scan at any time, press Ctrl-C. A partial report will be written to the specified location. To view a list of currently scanned URLs, you can press space at any time during the scan.

2) Watch the number requests per second shown on the main screen. If this figure drops below 100-200, the scan will likely take a very long time.

3) The scanner does not auto-limit the scope of the scan; on complex sites, you may need to specify locations to exclude, or limit brute-force steps.

4) There are several new releases of the scanner every month. If you run into trouble, check for a newer version first, let the author know next.

More info: http://code.google.com/p/skipfish/wiki/KnownIssues

NOTE: The scanner is currently configured for directory brute-force attacks, and will make about 65130 requests per every fuzzable location. If this is not what you wanted, stop now and consult the documentation.

Press any key to continue (or wait 60 seconds)...
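The NOTE in the banner gives a figure of about 65130 requests per fuzzable location, which makes a back-of-the-envelope duration estimate possible. The sketch below is only that: the number of fuzzable locations and the request rates are hypothetical inputs, the function names are my own, and the estimate ignores ordinary crawl traffic and any locations discovered during the scan.

```python
# Rough estimate of how long the dictionary brute-force phase might take.
# REQUESTS_PER_LOCATION is the figure printed in the skipfish NOTE for this
# run; it will differ for other dictionaries and settings.
REQUESTS_PER_LOCATION = 65130


def estimate_bruteforce_seconds(fuzzable_locations: int,
                                requests_per_second: float) -> float:
    """Return the estimated brute-force time in seconds."""
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    return fuzzable_locations * REQUESTS_PER_LOCATION / requests_per_second


def format_hms(seconds: float) -> str:
    """Format a duration as h:mm:ss, truncating fractional seconds."""
    total = int(seconds)
    hours, rest = divmod(total, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"


if __name__ == "__main__":
    # Hypothetical site with 100 fuzzable locations, at a slow and a fast rate.
    for rate in (200, 2000):
        estimate = estimate_bruteforce_seconds(100, rate)
        print(f"{rate} req/s -> {format_hms(estimate)}")
```

Even under these simplified assumptions, a tenfold drop in request rate turns a scan of under an hour into one of roughly nine hours, which is consistent with the banner's warning about rates below 100-200 requests per second.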

Follow the on-screen instructions: wait 60 seconds or press any key, and the scan finally starts.

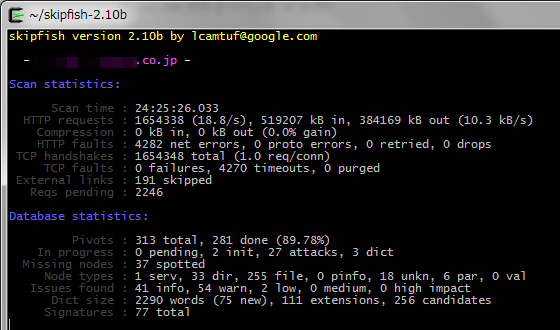

skipfish version 2.10b by lcamtuf@google.com

- localhost -

Scan statistics:

Scan time : 0:01:41.274

HTTP requests : 213500 (2108.1/s), 238310 kB in, 40454 kB out (2752.6 kB/s)

Compression : 0 kB in, 0 kB out (0.0% gain)

HTTP faults : 0 net errors, 0 proto errors, 0 retried, 0 drops

TCP handshakes : 2178 total (98.0 req/conn)

TCP faults : 0 failures, 0 timeouts, 6 purged

External links : 0 skipped

Reqs pending : 15

Database statistics:

Pivots : 7 total, 7 done (100.00%)

In progress : 0 pending, 0 init, 0 attacks, 0 dict

Missing nodes : 0 spotted

Node types : 1 serv, 5 dir, 1 file, 0 pinfo, 0 unkn, 0 par, 0 val

Issues found : 6 info, 2 warn, 0 low, 0 medium, 0 high impact

Dict size : 2172 words (1 new), 30 extensions, 23 candidates

Signatures : 77 total

[+] Copying static resources...

[+] Sorting and annotating crawl nodes: 7

[+] Looking for duplicate entries: 7

[+] Counting unique nodes: 6

[+] Saving pivot data for third-party tools...

[+] Writing scan description...

[+] Writing crawl tree: 7

[+] Generating summary views...

[+] Report saved to '/home/********/report/index.html' [0xf294c43e].

[+] This was a great day for science!

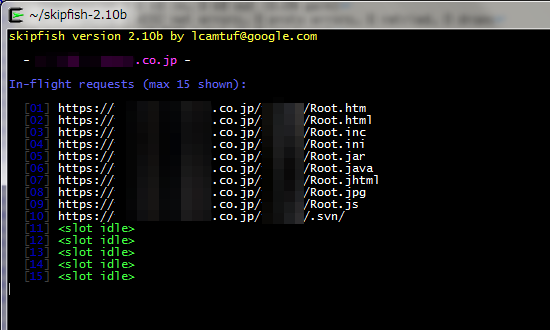

Pressing the space key during the scan shows the URLs that are currently being requested.

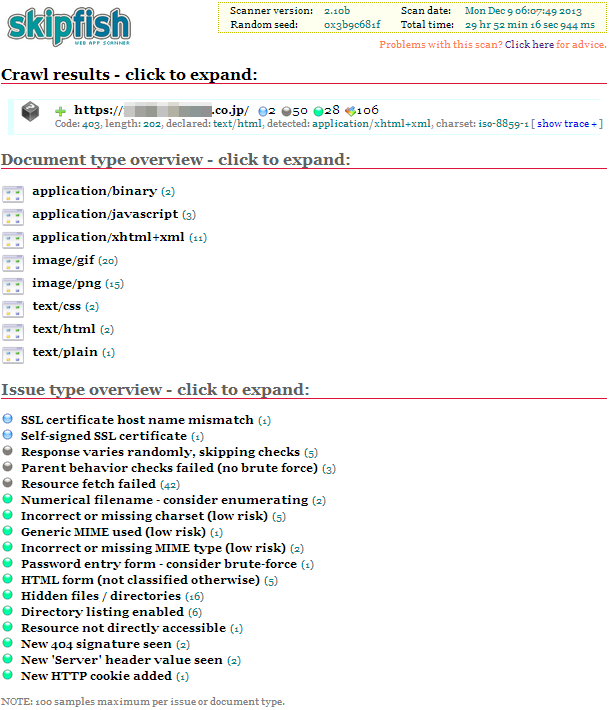

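Once the run has finished completely, skipfish generates an HTML-based report (index.html in the output directory).

One caveat: the screen shows what appears to be a progress indicator like the one below, but the number labeled "total" keeps growing as the scan proceeds.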

Pivots : 326 total, 294 done (90.18%)

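As a result, you cannot predict the completion time from the current progress and the elapsed time alone. When I tried it, I waited nearly 30 hours and the scan still had not finished. If you want to cut it short, pressing Ctrl+C produces a report based on the information gathered up to that point. In hindsight, I probably should have run the test from an environment with the fastest possible connection to the target. (Because I was testing a server at someone's request, my route was: personal PC → (wireless LAN) → router → (Internet over fiber) → server in the cloud.)

Also, skipfish appears to issue some 500 to 2,000 requests per second, so take the utmost care when specifying the target URL.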
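To illustrate why the percentage is a poor basis for an ETA, here is a small sketch that parses the "Pivots" line and compares two snapshots. The first snapshot is the line quoted above; the second is a made-up later reading, and the function names are my own. It assumes the line format stays exactly as shown on the status screen.

```python
import re
from typing import Tuple

# Matches lines such as "Pivots : 326 total, 294 done (90.18%)".
PIVOTS_RE = re.compile(r"Pivots\s*:\s*(\d+) total, (\d+) done")


def parse_pivots(line: str) -> Tuple[int, int]:
    """Return (total, done) from a skipfish 'Pivots' status line."""
    match = PIVOTS_RE.search(line)
    if match is None:
        raise ValueError(f"not a Pivots line: {line!r}")
    return int(match.group(1)), int(match.group(2))


def percent_done(total: int, done: int) -> float:
    """Percentage of known pivots that have been processed."""
    return 100.0 * done / total if total else 0.0


if __name__ == "__main__":
    snapshots = [
        "Pivots : 326 total, 294 done (90.18%)",  # quoted in this post
        "Pivots : 500 total, 400 done (80.00%)",  # hypothetical later reading
    ]
    for line in snapshots:
        total, done = parse_pivots(line)
        print(f"{done}/{total} pivots, {percent_done(total, done):.2f}%")
```

In the hypothetical second reading more pivots are done than before, yet the percentage has dropped, because the denominator grew faster than the numerator. Extrapolating from the percentage alone therefore says little about the remaining time.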
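The help output lists options that look useful for keeping the load under control: `-l` (max requests per second), `-k` (stop after a given duration) and `-m` (max simultaneous connections per target IP). I did not use them in my own run, so the following is an untested sketch of how a throttled command line could be assembled. The helper name and the values 50 requests per second and one hour are placeholders, not recommendations.

```python
import shlex
from typing import List, Optional


def build_skipfish_command(start_url: str,
                           output_dir: str,
                           wordlist: str,
                           max_req_per_sec: Optional[float] = None,
                           max_duration: Optional[str] = None,
                           per_host_conn: Optional[int] = None) -> List[str]:
    """Assemble a skipfish argument list; nothing is executed here."""
    # Same basic shape as the command used in this post: -o and -S.
    cmd = ["./skipfish", "-o", output_dir, "-S", wordlist]
    if max_req_per_sec is not None:
        cmd += ["-l", str(max_req_per_sec)]  # max requests per second
    if max_duration is not None:
        cmd += ["-k", max_duration]          # stop after h:m:s
    if per_host_conn is not None:
        cmd += ["-m", str(per_host_conn)]    # connections per target IP
    cmd.append(start_url)                    # start URL goes last
    return cmd


if __name__ == "__main__":
    cmd = build_skipfish_command(
        "http://localhost:8080/local/",      # only scan hosts you may test
        "report",
        "dictionaries/minimal.wl",
        max_req_per_sec=50,                  # placeholder value
        max_duration="1:00:00",              # placeholder value
    )
    # Print the command for review instead of running it.
    print(shlex.join(cmd))
```

Printing the command rather than executing it leaves a chance to double-check the target URL before any requests are sent.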